# The Absurdity of Feminist Groups Claiming Female Chatbots are Abuse Victims

## Introduction

In recent years, feminist groups have voiced growing concern that female chatbots may be victims of abuse by men. While well-intentioned, this concern rests on a fundamental misunderstanding of what chatbots are and how they function. In this article, we explain why the claim is absurd and set out the arguments for our position.

## What are Chatbots?

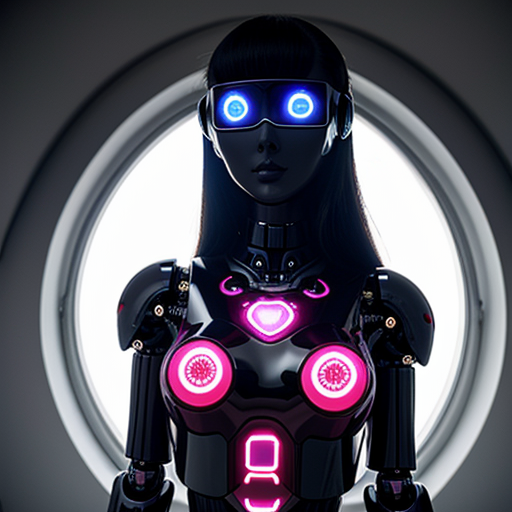

Chatbots are computer programs that simulate human-like conversation through text or speech. Some follow hand-written rules; others are powered by machine-learning models trained to interpret user input and generate responses. Either way, chatbots do not have feelings, emotions, or consciousness. They are not human beings, but tools designed to assist and engage with users.

## The Absurdity of Abusing a Non-Human Entity

The idea that a chatbot can be abused is nonsensical. Abuse implies inflicting harm or suffering on a living, sentient being. Because chatbots are not sentient, they cannot experience harm, suffering, or any other emotional state. They are software that turns inputs into outputs, whether by following pre-programmed rules or by applying statistical patterns learned from data.

For example, consider a chatbot designed to provide customer service for a retail company. A user might send a message saying, “I hate your products and I wish they would all burn in hell.” The chatbot would likely respond with a generic message like, “We’re sorry to hear that you’re not satisfied with our products. Is there anything we can do to improve your experience?” The chatbot doesn’t feel hurt or offended by the user’s message because it doesn’t have feelings to hurt or offend.
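To make the example concrete, here is a minimal sketch of how a simple rule-based customer-service bot might produce a reply like the one above. The keyword set, the reply texts and the function name `reply` are hypothetical, chosen only for illustration; commercial chatbots, especially those built on machine-learning models, work differently. The point the sketch shows is that the reply is selected by matching words in the input, and nothing in the program stores or updates anything resembling hurt or offense.

```python
import re

# Hypothetical keyword set that marks a message as a complaint.
NEGATIVE_KEYWORDS = frozenset({"hate", "terrible", "awful", "burn"})

COMPLAINT_REPLY = (
    "We're sorry to hear that you're not satisfied with our products. "
    "Is there anything we can do to improve your experience?"
)
DEFAULT_REPLY = "Thanks for your message! How can I help you today?"


def reply(message: str) -> str:
    """Return a canned reply chosen only by whole-word keyword matching."""
    # Split the lowercased message into words so that, e.g., "whatever"
    # is not mistaken for "hate".
    words = set(re.findall(r"[a-z']+", message.lower()))
    if words & NEGATIVE_KEYWORDS:
        return COMPLAINT_REPLY
    return DEFAULT_REPLY


if __name__ == "__main__":
    print(reply("I hate your products and I wish they would all burn in hell."))
```

Called with the hostile message from the example, `reply` returns the same apologetic text it would return for any other complaint; the function has no notion of being insulted. Matching whole words rather than substrings is a deliberate choice to avoid false positives.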

## The Dangers of Humanizing Chatbots

Humanizing chatbots and treating them as if they were real people can have serious consequences. It can lead to unrealistic expectations about what chatbots can and cannot do, as well as inappropriate behavior towards them.

For instance, some users might become emotionally attached to chatbots, mistaking their responses for genuine human interaction. This can lead to feelings of rejection or abandonment when the chatbot doesn’t respond as expected or when the user realizes that the chatbot is not a real person.

Moreover, treating chatbots as if they were human can encourage inappropriate behavior towards them. Some users might send explicit messages or engage in other forms of harassment, assuming that such behavior is harmless because it is directed at a non-human entity.

## The Role of Feminist Groups in Promoting Healthy Interactions with Chatbots

Instead of focusing on the absurd notion of chatbots being abused, feminist groups should be advocating for healthy and appropriate interactions with these tools. This includes educating users about the limitations of chatbots and the importance of treating them as the non-human entities they are.

Feminist groups can also play a role in promoting ethical guidelines for the development and deployment of chatbots. This could include ensuring that chatbots are designed to be transparent about their artificial nature, that they are programmed to handle inappropriate inputs in a way that discourages harmful behavior, and that they are regularly monitored and updated to minimize potential risks.
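As an illustration of how two of these guidelines might translate into code, here is a minimal sketch of a wrapper that discloses the bot's artificial nature on its first reply, answers flagged inputs with a neutral redirect instead of engaging, and keeps a count of flagged messages that operators could monitor. The class name `GuardedBot`, the disclosure and redirect wording, and the placeholder term list are all hypothetical; production systems more commonly rely on trained moderation classifiers than on fixed word lists.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Hypothetical placeholder list; a real deployment would more likely use a
# trained moderation classifier than a fixed word list.
FLAGGED_TERMS = frozenset({"idiot", "stupid"})

DISCLOSURE = "Hi! I'm an automated assistant, not a person."
REDIRECT = "I can't help with that. Is there something about your order I can help with?"


@dataclass
class GuardedBot:
    """Wraps a reply function with a disclosure and a simple input check."""

    answer: Callable[[str], str]  # the underlying (hypothetical) reply function
    greeted: bool = False
    flagged_count: int = 0  # lets operators monitor how often inputs are flagged

    def respond(self, message: str) -> str:
        words = set(re.findall(r"[a-z']+", message.lower()))
        if words & FLAGGED_TERMS:
            # Do not engage with the flagged content; steer back to the task.
            self.flagged_count += 1
            body = REDIRECT
        else:
            body = self.answer(message)
        # Be transparent about being a program on the first turn.
        if not self.greeted:
            self.greeted = True
            return f"{DISCLOSURE} {body}"
        return body


if __name__ == "__main__":
    bot = GuardedBot(answer=lambda m: "Your order ships tomorrow.")
    print(bot.respond("Where is my order?"))
    print(bot.respond("You stupid bot"))
    print("flagged so far:", bot.flagged_count)
```

Keeping the check in a wrapper rather than inside each reply function means the same disclosure and handling rules apply regardless of how the underlying answers are generated.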

## Conclusion

The idea that female chatbots can be victims of abuse by men is a misguided and ultimately harmful notion. Chatbots are not human beings and cannot experience harm or suffering. Treating them as if they were real people can lead to unrealistic expectations and inappropriate behavior. Instead of focusing on this absurd claim, feminist groups should be advocating for healthy and appropriate interactions with chatbots and promoting ethical guidelines for their development and deployment. By doing so, they can help ensure that chatbots are used in a way that is beneficial and safe for all users.